Two prominent informatics examples of gamification come from bioinformatics. Two groups of researchers have created games that people play to help the researchers solve their research problems. The older of the two is FoldIt, where users "fold" proteins. The user with the highest score wins (and has helped the researchers find the best 3D structure for a particular protein). So there's crowdsourcing going on here, too.

Another example is Phylo, where users help the researchers perform multiple sequence alignments (MSAs). A lot of bioinformatics reduces to aligning, or pairing up, symbols that stand for different things (amino acids in the proteins of FoldIt, nucleotides in Phylo). In fact, the intro to the Phylo game (the onboarding part) is a really good tutorial on sequence alignment. It's easy for a computer to align two sequences by matching up symbols to maximize a score. It gets tougher for MSAs because the computer needs a good set of heuristics (simplified rules) to make the problem solvable quickly. People can try to outdo the computer on an MSA since they aren't bound by those heuristics. They can spot patterns or try to solve the problem differently.
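Aligning two sequences is "easy" for a computer because it can be solved exactly with dynamic programming. Here is a minimal sketch using the classic Needleman-Wunsch algorithm. The scoring values (+1 for a match, -1 for a mismatch, -1 per gap) are assumptions chosen for illustration, not necessarily what Phylo uses. The same table-filling idea extends to k sequences, but the table then has on the order of n^k cells for sequences of length n, which is why MSA tools fall back on heuristics.

```python
def align(a: str, b: str, match: int = 1, mismatch: int = -1, gap: int = -1):
    """Globally align two sequences (Needleman-Wunsch) with a linear gap penalty.

    Returns (best_score, aligned_a, aligned_b), where '-' marks a gap.
    """
    def pair(x: str, y: str) -> int:
        return match if x == y else mismatch

    n, m = len(a), len(b)
    # score[i][j] holds the best score for aligning a[:i] with b[:j]
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap  # a[:i] aligned entirely against gaps
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(
                score[i - 1][j - 1] + pair(a[i - 1], b[j - 1]),  # pair up two symbols
                score[i - 1][j] + gap,                            # gap in b
                score[i][j - 1] + gap,                            # gap in a
            )
    # Trace back from the bottom-right cell to recover one optimal alignment
    top, bottom = [], []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + pair(a[i - 1], b[j - 1]):
            top.append(a[i - 1])
            bottom.append(b[j - 1])
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            top.append(a[i - 1])
            bottom.append("-")
            i -= 1
        else:
            top.append("-")
            bottom.append(b[j - 1])
            j -= 1
    return score[n][m], "".join(reversed(top)), "".join(reversed(bottom))


best, row1, row2 = align("GATTACA", "GATCA")
print(best)
print(row1)
print(row2)
```

Here all five symbols of GATCA get matched and two gaps are needed, so the best score is 5 - 2 = 3.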

Both of these examples are full-blown games that use computing to solve a biology problem. That can be gamification, but gamification doesn't require turning something into a full-blown game; it only requires incorporating game elements. This last example (I finally got some Freakonomics content into this course) is a blog post illustrating the gamification of identifying objects in pictures. We've seen that computers are not very good at this problem but people are. One approach we saw was to make it an MTurk HIT and pay someone to do it. Here, we see that just adding a score essentially gets people to solve the problem for free. That's gamification.

(I'll sneak in one last link. Here's how one website gamified itself.)

Informatics Systems

Monday, April 9, 2012

Monday, March 19, 2012

Data Mining

This post on boingboing describes an effective way Target is using data mining: to predict which customers are pregnant based on their purchases. The original article appeared in the New York Times and can be found here. The boingboing article describes the data mining as "creepy", while this post at the Abbott Analytics blog looks at it more from the informatics side. Either way, Target has mined data about customers' buying habits to figure out, with good confidence, which customers are pregnant.

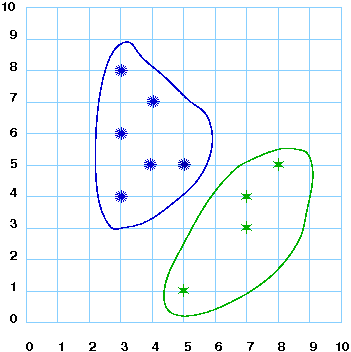

Clustering is one of the core parts of data mining. In clustering, a set of points is split into clusters, where each cluster presumably contains related data. The article for the bottom picture is a little bit technical, but it describes how to cluster users based on musical preferences.
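To make clustering concrete, here is a minimal sketch of k-means, one common clustering algorithm (there are many others, and the linked article may use a different one). Each point is a hypothetical user described by two made-up preference scores; the algorithm alternates between assigning every point to its nearest cluster center and moving each center to the average of its points.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Group 2D points into k clusters with Lloyd's k-means algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of the points assigned to it
        new_centroids = []
        for c, members in enumerate(clusters):
            if members:
                xs, ys = zip(*members)
                new_centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # centroids stopped moving, so the assignments are stable
        centroids = new_centroids
    return centroids, clusters


# Hypothetical users described by two made-up preference scores
users = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (8.5, 9), (9, 8)]
centroids, clusters = kmeans(users, k=2)
print(clusters)
```

Note that k, the number of clusters, has to be chosen up front; picking a good k is part of the art of clustering.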

In classification, you are given a data point and want to decide, based on its attributes, which group it belongs to. Clustering works with all the points and finds the groups; classification works when you already know the groups and want to place a new point into one of them. They go hand in hand.
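One simple classifier is k-nearest neighbors: find the k labeled points closest to the new point and let them vote. The sketch below is illustrative only; the labeled data and group names are hypothetical, and this is not necessarily the kind of model Target or the music article uses.

```python
import math
from collections import Counter


def knn_classify(labeled, new_point, k=3):
    """Predict a label for new_point by majority vote of its k nearest labeled points."""
    # Sort the labeled examples by distance to the new point and keep the k closest
    nearest = sorted(labeled, key=lambda item: math.dist(item[0], new_point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labeled data: (attributes, group) pairs, e.g. groups found earlier by clustering
labeled = [
    ((1, 1), "group A"), ((1.5, 2), "group A"), ((1, 1.5), "group A"),
    ((8, 8), "group B"), ((8.5, 9), "group B"), ((9, 8), "group B"),
]
print(knn_classify(labeled, (2, 2)))  # group A
print(knn_classify(labeled, (7, 8)))  # group B
```

This is where the two techniques meet: clustering can produce the groups, and a classifier can then assign new points to them.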

Wednesday, February 29, 2012

Tim Berners-Lee

As we know, Tim Berners-Lee invented the Web, in some sense. In this recent Wired article, that is expanded on a bit in the context of his courtroom testimony. He is also on record as saying that "World Wide Web" perhaps wasn't the best name, since the structure of the web is really that of a graph, so "Giant Global Graph" (GGG) might be more appropriate. Here's his blog post originating that idea. A graph (sometimes also called a network) is a mathematical model made of nodes (such as web pages or people) connected by edges (such as links or friendships). It fits lots of the digital aspects of our lives we take for granted: online friendships, the web itself, viral videos, and on and on...
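In code, a graph is often stored as an adjacency list: a mapping from each node to the nodes it connects to. The sketch below stores a few hypothetical web pages this way, with one directed edge per link, and uses breadth-first search to count the fewest clicks needed to get from one page to another. A friendship graph would work the same way, except that each edge would be stored in both directions.

```python
from collections import deque


def hops(graph, start, goal):
    """Return the fewest edges needed to get from start to goal, or None if unreachable."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return dist[node]
        for neighbor in graph.get(node, []):
            if neighbor not in dist:  # visit each node only once
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return None


# Hypothetical pages; an edge "A" -> "B" means page A links to page B
web = {
    "home": ["about", "blog"],
    "blog": ["post1", "post2"],
    "post2": ["wired-article"],
    "about": [],
}
print(hops(web, "home", "wired-article"))  # 3
print(hops(web, "about", "home"))          # None: links only go one way
```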

Here is the Internet shown as a graph:

and here is Facebook

Monday, February 13, 2012

NASA has unplugged its last mainframe. That's one picture of the direction computing has been heading over the last 30 years, but mainframes still exist to serve other needs, more closely related to enterprise-level data processing. Don't worry, though: NASA still has some big computer clusters.

Two slashdotted articles in a row relate to this section of I101: the shift to IP is freeing up real estate for old companies like AT&T, and the importance of supercomputers to weather prediction has been highlighted.

Wednesday, February 8, 2012

Here's a cheesy video that describes how TCP/IP, the suite of protocols at the core of the Internet, works:
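One core idea behind TCP/IP is that a message is broken into packets, IP delivers each packet independently (so packets can arrive out of order), and TCP uses sequence numbers to put them back together. The toy sketch below models only that reordering step. Real TCP numbers bytes rather than packets and also handles acknowledgments, retransmission of lost packets, and flow control, none of which is shown here.

```python
import random


def to_packets(message: str, size: int):
    """Split message into (sequence_number, chunk) packets of at most `size` characters."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]


def reassemble(packets):
    """Rebuild the message by putting packets back in sequence-number order."""
    return "".join(chunk for _, chunk in sorted(packets))


message = "Here's a cheesy video about TCP/IP"
packets = to_packets(message, size=5)
random.shuffle(packets)  # packets may take different routes and arrive out of order
print(reassemble(packets) == message)  # True
```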

A timely Wired article describes the importance of APIs to the Web. Ever wonder how websites all over the place have recently started letting you share things on Facebook?

Wednesday, February 1, 2012

Cyberinfrastructure

Cyberinfrastructure has become a buzzword for the infrastructure needed to support sophisticated information systems. A recent article that got slashdotted describes one aspect of the infrastructure behind a Facebook data center: the power. The data center in Oregon uses half the total power of the county, and expanding it involves working with the power company to add capacity to its grid. Why would the power company put up with that? Presumably because the data center is worth tens of millions of dollars to the local economy. As for Facebook's own value, the company is rumored to be filing for an IPO today.

The world's fastest computers are listed in the TOP500. These information systems are at the cutting edge of high performance computing (HPC). The current shape of HPC is a direct consequence of Moore's Law and the basic physics that chips heat up more at higher frequencies. Moore's Law observes that the number of components on a chip doubles roughly every 18-24 months, so those components keep getting packed closer together. Chips also used to run at ever-higher clock speeds. But running faster generates more heat, and when components are packed this tightly, that heat has nowhere to escape to in time. So individual CPUs don't really get faster any more; instead, performance now comes from putting more processors (and more cores per processor) to work in parallel. One future entry at or near the top of the TOP500 is not too far from here: Blue Waters is finally being installed at NCSA in Illinois.
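The gap between "18 months" and "24 months" matters more than it looks, because doublings compound. This quick sketch assumes a constant doubling period (real progress has been lumpier) and shows the growth factor over a decade for each end of that range.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """How many times a quantity multiplies if it doubles every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return 2 ** doublings


for months in (18, 24):
    print(f"doubling every {months} months: x{growth_factor(10, months):.0f} in 10 years")
# doubling every 18 months: x102 in 10 years
# doubling every 24 months: x32 in 10 years
```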

Wednesday, January 25, 2012

Ubiquitous Information

Google has made a new "privacy announcement" (outlined here in the Washington Post) stating that users can now expect data about their use of all Google products to be tracked together. Previously, a user's search activity and their activity on other Google products were tracked separately. Now, Google says the data collected from its separate products (Search, Gmail, YouTube, ...) will be aggregated to yield a better pool of information. Google is not giving users the ability to opt out of this data collection.
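Why would aggregated data be a "better pool of information"? Here is a toy sketch of joining separate logs on a shared user ID. The products, users, and events are all hypothetical, and this says nothing about how Google actually stores or combines its data; it only illustrates that a combined record can say more about a person than any single product's log does on its own.

```python
from collections import defaultdict


def aggregate(product_logs):
    """Merge per-product logs of (user, event) pairs into one combined profile per user."""
    profiles = defaultdict(list)
    for product, log in product_logs.items():
        for user, event in log:
            profiles[user].append((product, event))
    return dict(profiles)


# Hypothetical logs, each kept separately by a different product
logs = {
    "search": [("alice", "searched: running shoes")],
    "youtube": [("alice", "watched: marathon training tips")],
    "gmail": [("bob", "received: flight confirmation")],
}
profiles = aggregate(logs)
print(profiles["alice"])
```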

What benefit does this have for Google? What benefit does this have for the user? What drawbacks might it have for both?

Sensationalistic articles and headlines aside, data collection is everywhere; it is ubiquitous. What kind of data might Amazon collect about a user, for instance? When people speak of ubiquitous computing, though, they usually mean computers and networks being everywhere rather than data collection. Smartphones are a sign that computers are becoming ubiquitous, since they let you be online almost anywhere, anytime. Another way computers can become ubiquitous is through sensor informatics. Sensors collect data, and when integrated with a computer, they can store this data or transmit it over a network. If sensors become ubiquitous, there will be sensors everywhere. What applications of this can you think of? Using this ubiquitous hardware and its computing power to solve problems is informatics: sensor informatics. Wired has one sample application which may be viable soon.
